"Therefore, we need to protect not only the material entity of cultural heritage, but also our current interpretation of it and our connection with it. This is the point we want to express."

Ruishan Wu

The Present in the Future is the Past

Keyi Zhang: Can you tell us about the ideas and meaning behind "The Present in the Future is the Past", the AI hand-painting project about Hong Kong?

Ruishan Wu: This project is actually a collaboration. My mentor contributed some of his ideas, and other artists were involved in the research, so not all of the ideas are mine. But we all agreed on one concept: people's perspectives are also part of cultural heritage. Take Hong Kong as an example. Yau Ma Tei is a cultural heritage site in Hong Kong, and there are also places like Admiralty. We selected eight or nine representative places in Hong Kong, recruited participants, both locals and visitors, and asked them to use cultural maps to describe their impressions of these heritage sites in the past, their current connections to them, and their imaginings of the sites' future. Because these are places where people have lived or been involved, we think that people's relationships with them, whether the imprint of their lives, their everyday connections, or their imagination of the future, are part of this cultural heritage. Therefore, we need to protect not only the material entity of cultural heritage, but also our current interpretation of it and our connection with it. This is the point we want to express.

In the workshop, we mainly used generative AI to generate people's impressions of the cultural heritage sites. In this process, some sites appeared older, as they were in the past, while others appeared more fantastical. There were also some more virtual or non-existent imaginings of Kowloon Walled City, such as it being flooded, or appearing in a polished, high-end form very different from its original image. In short, there were all kinds of things. We found many differences from reality, and these are some of the interesting points. Then, in the exhibition, we had a robot perform by drawing these impressions in a mixed-media style, and we scanned the drawings and used AR to present them in 3D. In the end, visitors to the exhibition can see visualized forms of these cultural heritage sites as they exist in people's impressions.

Keyi Zhang: So this interactive form is more like text-to-image: it re-represents, or re-visualizes, different people's perspectives on the past, present and future of Hong Kong's heritage in a linear way.

Ruishan Wu: Yes, the aim is to emphasize the human dimension. Cultural heritage is not just natural or cultural property; it also includes ongoing, differing understandings and insights. We hope these contents will be recorded, so that in the future our present will be seen and remembered as the past.

But we did receive some feedback about AI ethics. Our work is practice-based: as artists, we first explore possibilities. But some people think there are moral and ethical issues involved that we also need to explore, for example, whether people will find it difficult to distinguish between the false and the real, or what kind of confusion they might face along the way. I think these are indeed issues we need to consider when using GenAI. But I also think that, as an artist, you may need to choose a focus: whether to be a practitioner or a critic.

The Feeling of Home

Keyi Zhang: I think this depends on whether AI is the core of your project. If AI is just one tool, or a small part, then it is not a project that could not be done without AI, and its focus is obviously not AI. Of course, the technology is also a very good part of this project, but I think that even without AI it could be interpreted very well, so I don't think AI ethics is a special concern here.

Of course, you can also tell us your views on the ethical issues between AI and humans. What ideas or attitudes do you have, and how do you try to avoid problems in your work? Would you be willing to share them with us?

Ruishan Wu: Because we use artificial intelligence to visualize cultural heritage, there may be moral and ethical issues. For example, how does the collected dataset carry cultural connotations, given that it comes from a specific cultural context? Will it distort the relationship between people and culture, and could it have negative effects? Some participants are visitors rather than locals, and because each result is generated through a dialogue, different prejudices may find their way into an image, which we then show to others as a vision. I think this may come down to individuals, and some negative effects may be unavoidable, such as conveying anti-social or anti-human ideas. But in the final analysis, these are ideas that people hold in the wider environment. So I think this question is hard to answer. Anything we make has advantages and disadvantages, and that requires critical thinking.

But when it comes to art, we can't refuse to do something just because of these criticisms. I remember a saying that artists are the pioneers of thought, while philosophers articulate those thoughts only afterwards, so they are latecomers; art is the pioneer, the place where ideas are carried out first. So art may be less carefully considered in many respects, but as the first expression, it shows a more pioneering idea.

Keyi Zhang: As you said, artists are pioneers of thought, and art contains a lot of intuition and intuitive expression. Philosophers, by contrast, use language to express and summarize things, and I think any language, whether English or Chinese, is an oversimplification of thought. It is a flatter, more linear, less three-dimensional way of expression. So I think I understand what you mean.

Ruishan Wu: AI is such a big topic that there is a lot to say. I remember that when we had just started our master's program last year, AI's potential seemed to explode all of a sudden. With the emergence of models like Midjourney and ChatGPT (GPT-3.5), we found it had many possibilities, especially as it began to enter the fields of art and expression, writing poetry and painting. In many of our chat groups, people felt that artistic creation was under threat.

At that time, my mentor Hector taught us machine learning, so that we would understand how the technology works, what its principles are, and how it can be used to benefit humans and creators rather than replace our capacity for expression, our creations, or other human functions. That is when I began to pay attention to emotions, and wanted to use emotion as a paint or brush unique to us humans.

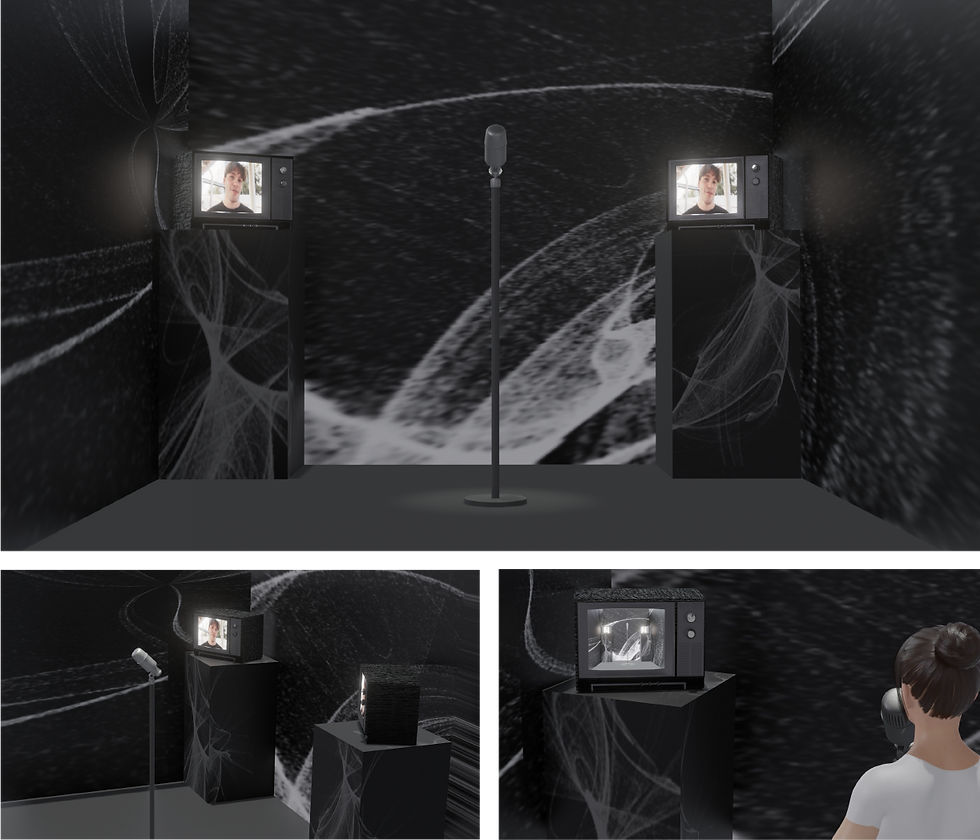

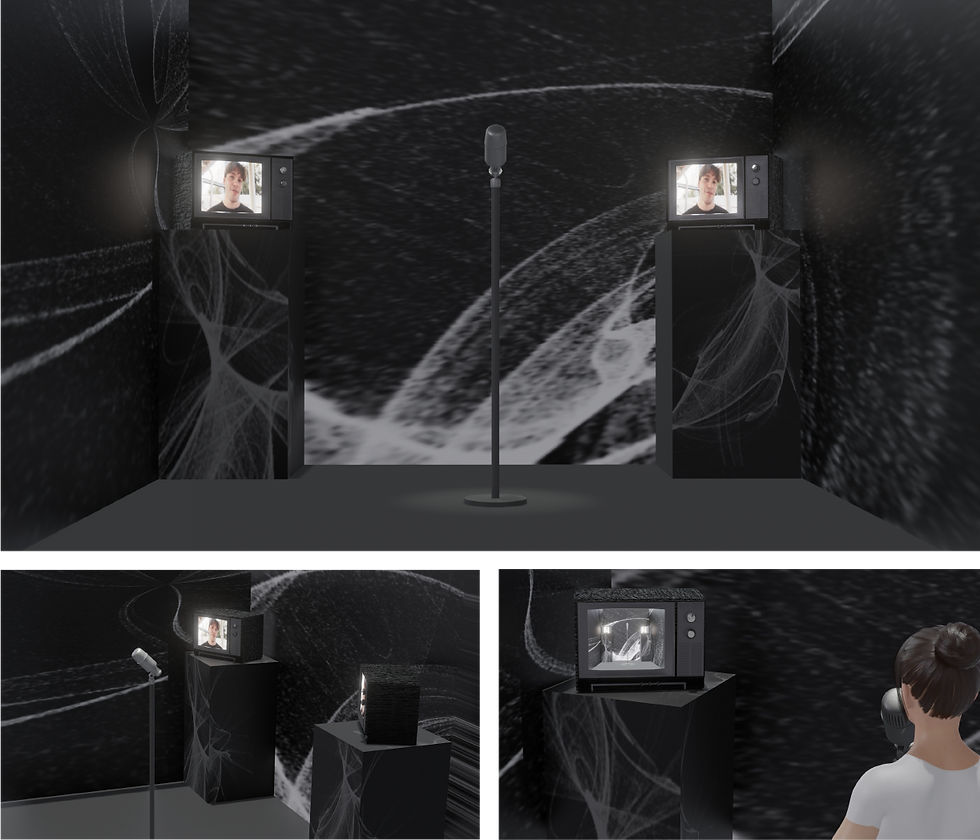

Keyi Zhang: Is that the reason for the "Emotional Echoes" project?

Ruishan Wu: Yes, and also "Digital Garden". I worked on that project with Chenyu, but ours was only a proposal and did not really become a complete work. So at that point, I started to think about the aspects of people that AI cannot replace, and about the subtle connections and irreplaceable qualities in our cognition and physiology.

Emotional Echoes

Keyi Zhang: Since we just talked about the inspiration for "Emotional Echoes", and I really like this work, could you share your whole creative process for it, including how it was finally exhibited and what feedback and effects it had?

Ruishan Wu: This work is actually incomplete. I went through a painful iteration process, and my initial idea was something else entirely. I was studying machine learning at the time and was more interested in affective computing, that is, emotion computing. But my mentor thought this was too narrow and conventional, and not interesting enough.

Affective computing is an academic field within computer science that recognizes people's facial expressions, intonation, voices, and gestures. Researchers recruit actors to perform, for example saying something in a very happy tone or a sad tone, or making a sad face. They use these datasets to train a model, which can then identify people's emotions from their facial expressions and tone of voice. For example, it might score me as 40% happy and 30% anxious.
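
A model that reports "40% happy, 30% anxious" is typically converting its raw per-class scores into probabilities with a softmax. The sketch below shows only that final step, assuming such a trained classifier already exists; the emotion labels and logit values are invented for illustration and do not come from any real affective-computing model.

```python
import math


def emotion_scores(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw classifier logits into probabilities that sum to 1 (softmax)."""
    # Subtract the largest logit before exponentiating, for numerical stability.
    m = max(logits.values())
    exps = {label: math.exp(v - m) for label, v in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Hypothetical logits a face/voice model might emit for one clip.
logits = {"happy": 2.0, "anxious": 1.7, "sad": 1.0, "neutral": 0.9}
for label, p in sorted(emotion_scores(logits).items(), key=lambda kv: -kv[1]):
    print(f"{label}: {p:.0%}")
```

Because the softmax always sums to 1, such scores describe a relative blend of the trained categories rather than independent intensities of each emotion.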

But my mentor thought this was very narrow, and asked how I could arrive at new understandings or concepts from an artistic perspective. Because my background was in design, my perspective at the time was more commercial: I thought about practical uses and business considerations. But after I came into contact with fine art, and perhaps under the influence of some teachers, I came to understand that art is not just about being beautiful or useful. It may not be useful at all, but it has an impact on your thoughts or concepts. A professor once said, "Good art makes you feel like your heart has been punched."

So I tried to come up with more interesting concepts, drew these sketches, and thought about what kind of response they would get. During that time, I lay awake all night thinking about how to make this more interesting, lol. I wanted to compare people and machines, because people are also a bit like a black box. Everyone is a black box to others, something that cannot be fully understood; we don't know how the thinking inside is constructed, much as we cannot fully understand how a trained model works. So how do we read "people"? Perhaps physiological signals or other outputs can help. Machines, similarly, have outputs: a recognition box will output emotional waves. Just as we speak of input and output when describing a machine as a box, I started thinking about humans, AI, and visualization in the same terms.

At that time I also really liked an artist named Anna Ridler. Her tulip series and her performance works inspired me to shift toward multimodal ideas: from body movement to electromechanical recognition. In general, it is about input and output, the connection between them, or the stimulation of synesthesia.

I think the inputs of machines are defined by people, while for humans there may be a God who defines our inputs: hearing, touch, and the other senses. Because the machine is defined by humans, it is limited by human scientific capabilities. It may be able to "hear", but the way it converts what it hears is defined by us humans. To some extent it does not really hear, and it is not so organic.

In general, I think we humans define machines the way a god would, while we ourselves may be defined by a higher god. So there is still a lot to explore. Exploring ourselves as a box is perhaps what cognitive science does.

I think "Emotional Echoes" is still exploring a sense of synesthesia. According to some theories, not everyone has synesthesia, the ability, for example, to perceive a color as a sound, or to picture an image when hearing a sound.

Keyi Zhang: So "Emotional Echoes" is exploring the concept of synesthesia?

Ruishan Wu: Yes, that's right. In "Emotional Echoes", people first hear symphonic music while watching a ring on a big screen. The ring vibrates according to the frequency of the music: it emits particles, and both the number of particles and the appearance of the wave ring depend on the frequency of the original audio. For example, the signal may have to reach a certain threshold before the wave ring appears, and at other thresholds there are more or fewer particles. A second screen below shows another ring, which reflects the viewer's GSR (galvanic skin response) signal. GSR records the skin's electrodermal signal. If you have a particularly large emotional fluctuation, for instance if you are very nervous or very excited, you sweat, the electrical conductivity of your skin increases, and this produces a spectrum, which I use to generate the ripples of the second ring.
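
The two rings can be read as two threshold-style mappings. The sketch below is a hypothetical reconstruction, not the installation's code: it assumes the audio has already been reduced to one normalized value per frame (for example, energy in some frequency band), and it skips the spectrum step, working directly on skin-conductance samples. The thresholds, particle counts, and baseline window are all made-up parameters.

```python
def ring_particles(level: float, appear_at: float = 0.2,
                   thresholds: tuple[float, ...] = (0.4, 0.6, 0.8),
                   per_band: int = 10) -> int:
    """Map a normalized audio feature (0..1) to a particle count for the upper ring."""
    if level < appear_at:
        return 0  # below the first threshold the wave ring is not drawn at all
    # One band of particles for appearing, plus one more per threshold crossed.
    return per_band * (1 + sum(level >= t for t in thresholds))


def gsr_ripples(conductance: list[float], window: int = 4) -> list[float]:
    """Ripple amplitude per sample: how far skin conductance rises above its recent baseline."""
    ripples = []
    for i, value in enumerate(conductance):
        history = conductance[max(0, i - window):i]
        # With no history yet, treat the first sample as its own baseline.
        baseline = sum(history) / len(history) if history else value
        ripples.append(max(0.0, value - baseline))  # only rises (arousal) make ripples
    return ripples


# Hypothetical inputs: per-frame audio levels, and skin conductance in microsiemens.
print([ring_particles(x) for x in (0.1, 0.3, 0.5, 0.9)])  # -> [0, 10, 20, 40]
print(gsr_ripples([2.0, 2.0, 2.0, 2.0, 3.0, 2.0]))  # -> [0.0, 0.0, 0.0, 0.0, 1.0, 0.0]
```

Comparing against a rolling baseline rather than a fixed value means slow drifts in skin conductance over a session do not keep the lower ring permanently rippling; only relatively sudden rises show up.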

So in the whole installation, the viewer sees the first ring, which visualizes the excitement of the music itself, and the ring below, which visualizes the viewer's physiological signals and can also be understood as a visualization of emotion. Throughout this process, we can observe people's reactions: whether they try to keep up with the vibration of the music, or whether some people barely respond emotionally to it. How should I put it? It is an experimental art of observation, just to see how people react.

Digital Garden

Keyi Zhang: I think this project is very interesting. You just said that people are like black boxes, and this is equivalent to giving music to a person as input, reading their emotions as the output, and visualizing both together. Your black boxes are a machine box and a human box. I think this is a very good concept and a very good materialization; I mean the final installation, which I think is super good. Can you tell us about "Digital Garden"?

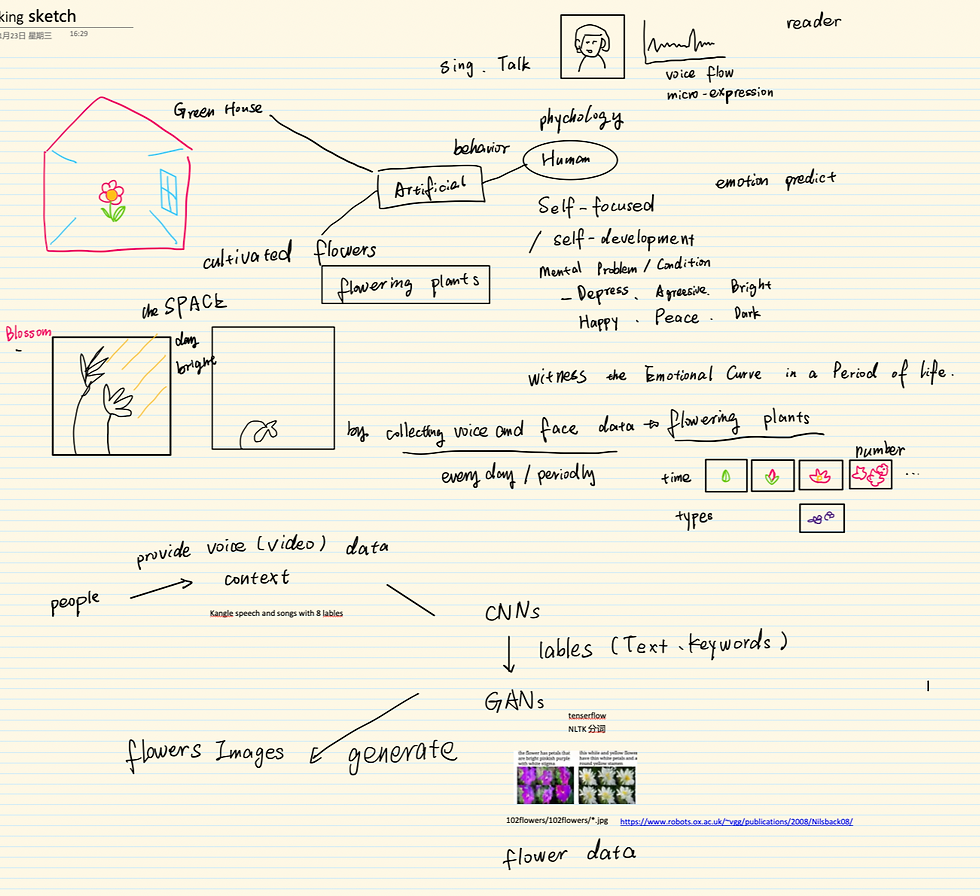

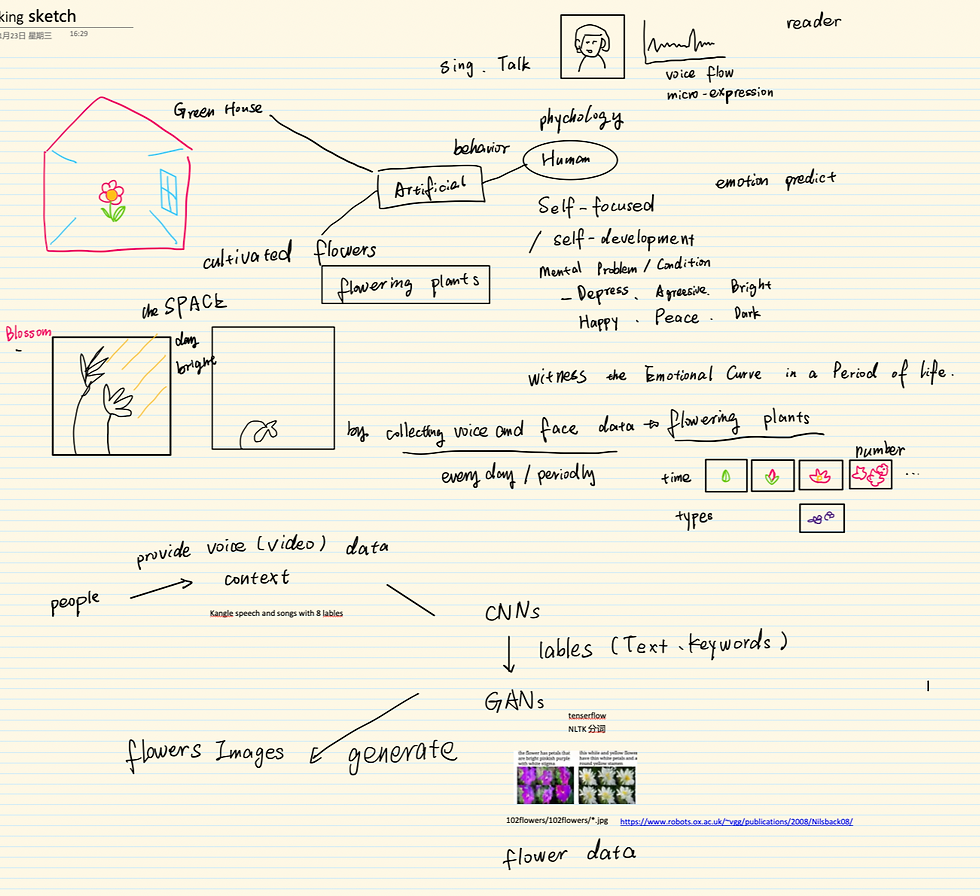

Ruishan Wu: Actually, my two projects are connected. "Emotional Echoes" relates physiological signals to sound: when you make a movement, do you think of it as a sound, or as something else? It's that kind of thinking. "Digital Garden" is a process of visualizing people's psychological states, and it also involves affective computing. Later, I realized that what I was doing was mapping. For example, "Digital Garden" is about which emotion maps to which visual expression. Often this is an artist's way of thinking: what color do you choose, and what form do you use for expression? In CS this is called mapping, and researchers study "mapping" as a subject of its own; neural networks, for instance, are actually composed of many, many mappings.
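
In code, the artist's decision about which emotion becomes which color is simply a function. The sketch below is a hypothetical "Digital Garden"-style mapping; it assumes emotions arrive as a valence/arousal pair (a common model in affective computing, but not one the interview mentions), and the hue, brightness, and growth formulas are arbitrary choices for illustration.

```python
import colorsys
from dataclasses import dataclass


@dataclass
class GardenState:
    hue: float          # 0..1 around the color wheel
    brightness: float   # 0..1
    growth_rate: float  # arbitrary units per frame


def map_emotion(valence: float, arousal: float) -> GardenState:
    """Hypothetical mapping: valence in [-1, 1], arousal in [0, 1]."""
    # Clamp inputs so out-of-range sensor values cannot break the mapping.
    valence = max(-1.0, min(1.0, valence))
    arousal = max(0.0, min(1.0, arousal))
    t = (valence + 1) / 2  # rescale valence to 0..1
    # Negative valence -> cool blue (hue 0.6); positive -> warm yellow-orange (hue 0.15).
    hue = 0.6 + (0.15 - 0.6) * t
    brightness = 0.3 + 0.7 * arousal  # calm states stay dim but never fully dark
    growth_rate = arousal * (0.5 + 0.5 * t)  # excited, positive states grow fastest
    return GardenState(hue, brightness, growth_rate)


def to_rgb(state: GardenState) -> tuple[float, float, float]:
    """Convert the light's hue and brightness into an RGB triple (0..1 each)."""
    return colorsys.hsv_to_rgb(state.hue, 1.0, state.brightness)


print(to_rgb(map_emotion(0.8, 0.9)))
```

Chaining such functions, for instance feeding the output of `map_emotion` into `to_rgb`, is the same idea as the stacked mappings in a neural network, except that here each step is chosen by hand rather than learned from data.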

I mapped the recognized emotions and sounds to the textures of the flowers and grass growing in the space, the color and brightness of the light, and so on. These images show my earlier idea and the rough effect that was realized later. My inspiration was Scott Hessels' work "Brakelights", a film narrative driven by real-time traffic flow. He filmed 200 random conversations between a man and a woman in five different emotions: anger, annoyance, indecision, hope, and love, from "I hate you" to "I love you" and everything in between. The footage follows the couple from talking to quarreling to reconciliation. A camera is pointed at the brake lights of a nighttime traffic jam in Los Angeles, and a computer program matches the brightness of the red to a specific type of dialogue line. When traffic jams up, the red grows brighter and the couple starts to fight; when it starts flowing again, they reconcile and fall in love again.
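
The "Brakelights" mechanism, red brightness selecting a type of dialogue line, can be sketched as a simple brightness quantization. This is not Hessels' program: the red-pixel test, the ordering of the five emotions from calm to heated, and the placeholder dialogue bank are all assumptions made for the example.

```python
import random

# Assumed ordering, calmest to most heated; the description names these five
# emotions but not how the program orders them.
EMOTIONS = ["love", "hope", "indecision", "annoyance", "anger"]


def red_brightness(pixels: list[tuple[float, float, float]]) -> float:
    """Red-light intensity of a frame, averaged over all pixels; (r, g, b) in 0..1."""
    if not pixels:
        return 0.0
    # Count only strongly red pixels, as a crude brake-light detector.
    reds = [r for (r, g, b) in pixels if r > 0.5 and r > 2 * max(g, b)]
    return sum(reds) / len(pixels)


def pick_emotion(brightness: float) -> str:
    """Quantize brightness (0..1) into one of the five emotion bins."""
    brightness = min(max(brightness, 0.0), 1.0)
    index = min(int(brightness * len(EMOTIONS)), len(EMOTIONS) - 1)
    return EMOTIONS[index]


# Hypothetical dialogue bank: placeholder lines for each emotion.
LINES = {e: [f"<{e} line 1>", f"<{e} line 2>"] for e in EMOTIONS}

# A mostly dark street frame with a few glowing brake lights.
frame = [(0.9, 0.1, 0.1)] * 3 + [(0.2, 0.2, 0.2)] * 7
emotion = pick_emotion(red_brightness(frame))
print(emotion, random.choice(LINES[emotion]))
```

More brake lights, or brighter ones, push the frame into a higher bin, so a denser jam selects angrier lines, which matches the behavior described above.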

My approach may be the opposite of his, and its content focuses more on self-report: people's inner thoughts and everyday mental states.

Chenyu Lin: So does the flower change based on this video, does it change when the audience interacts, or is it text-to-image?

Ruishan Wu: The texture comes from StyleGAN2. I had worked with StyleGAN2 before, and I trained it on some abstract image sets. With this kind of abstract image set, you need to add CLIP, a model that understands images and matches them with text. But I can use it in reverse: I input some emotions and their values, it outputs an abstract image like this, and finally I apply that image to the flower. It does not yet run in real time.
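
Using CLIP "in reverse" with StyleGAN2 is commonly done by searching the generator's latent space for a code whose image CLIP rates as close to a text prompt. The toy below keeps only the shape of that loop: both networks are replaced with random linear maps so it runs with NumPy alone, the emotion "text embeddings" are random vectors, and the 0.7/0.3 weights are invented. It is not Ruishan Wu's pipeline.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT, PIXELS, EMBED = 8, 32, 4

# Toy stand-ins: a random linear "generator" plays StyleGAN2's role and a random
# linear "image encoder" plays CLIP's. The real models are deep networks.
G = rng.normal(size=(PIXELS, LATENT)) / np.sqrt(LATENT)
E = rng.normal(size=(EMBED, PIXELS)) / np.sqrt(PIXELS)
M = E @ G  # latent -> image -> embedding, collapsed into one matrix


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def guide_latent(z: np.ndarray, target: np.ndarray,
                 steps: int = 400, lr: float = 0.05) -> np.ndarray:
    """Gradient ascent on z to raise cos(E(G(z)), target), CLIP-guidance style."""
    t_norm = np.linalg.norm(target)
    for _ in range(steps):
        u = M @ z
        u_norm = np.linalg.norm(u)
        # Gradient of (u . t) / (|u| |t|) with respect to u, chained back to z via M^T.
        grad_u = target / (u_norm * t_norm) - (u @ target) * u / (u_norm**3 * t_norm)
        z = z + lr * (M.T @ grad_u)
    return z


# Hypothetical "text embeddings" for two emotions, blended by the user's values.
emotion_text = {"joy": rng.normal(size=EMBED), "calm": rng.normal(size=EMBED)}
target = 0.7 * emotion_text["joy"] + 0.3 * emotion_text["calm"]

z0 = rng.normal(size=LATENT)
z = guide_latent(z0, target)
print(f"similarity before: {cosine(M @ z0, target):.3f}, after: {cosine(M @ z, target):.3f}")
```

In a real setup the gradient would come from automatic differentiation through both networks rather than the hand-derived cosine gradient used here, and each prompt needs many optimization steps, which is one reason such generation is hard to run in real time.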

INTERVIEWER: KE ZHANG

CURATOR: KE ZHANG, CHENYU LIN

EDITOR: KE ZHANG, CHENYU LIN

GRAPHIC DESIGNER: VIVI SHEN

Ruishan Wu was born and raised in Hangzhou and now lives in Hong Kong. She obtained a bachelor's degree in industrial design and a master's degree in creative media arts from City University of Hong Kong, and is about to go to Canada to pursue a doctorate. Her professional fields include digital vision, hardware manufacturing, and user experience. She is particularly interested in machine learning, data visualization, and human-computer interaction. Combining technical skill with artistic vision, she creates boundary-pushing, innovative works that engage audiences.